My second year at AWS: down the rabbit hole

We all know time flies. I posted about my first year at Amazon Web Services just 12 months ago and now I’m already celebrating my second AWS-birthday.

It’s been a year of both personal and professional growth: it began in December, when I became head of a project that has been helping some of our customers run their platforms at massive scale, and led all the way up to my promotion to Senior TAM a few months back. Needless to say, the customers I’m looking after have made giant leaps too, as part of transformation processes that start from the infrastructure and can reach up to their corporate culture.

My post about “Year One” was organised by successive evolutionary phases, but this won’t really make sense from the second year onward: I’ve been involved in a bunch of projects, every one of them with its own life. Why not try, then, to recap the last 12 months by picking the project (more details on it here, with a cameo appearance) that took most of my time and checking whether our slogan “Work Hard, Have Fun, Make History” has truly been met in it? Let’s start here.

Work Hard

This is how it begins. It might seem obvious, as no one will ever pay us to do something other than working hard, but it’s not. Working hard in AWS means taking responsibility, being effective, facing challenges and turning every opportunity into a huge success.

“Hard” as in pushing our brains to 100%, not necessarily as in working 16 hours a day. True, we carry pagers, and might end up having late evening calls with the teams in Seattle or doing late night debugging sessions from our hotel room, but this only happens in exceptional situations.

I personally find this extremely rewarding: when you focus on a project with all your energy, then the sense of achievement when it’s done is super strong.

…check ✓

Have Fun

“Having Fun” is something we keep reading in job offers: it’s a “new economy” concept, meant as enjoying what you do and finding personal motivation in addition to the obvious business one.

I find this kind of comes by itself: if you work effectively on something and achieve results, then customers will trust you, the relationship will become more friendly and relaxed and you will end up having a lot of fun with them, even in the day to day.

Check ✓

Make History

Last one, and possibly just another consequence. Is there any other way a successful project can finish?

Sometimes we might not realise how big a given change can be. We might focus on some virtual machines becoming EC2 instances and some hard drives becoming S3 buckets, but there’s much more behind the curtain: you will see a quickly changing and moving world there.

Never underestimate the importance of small actions and small steps as they can quickly prove to be giant leaps.

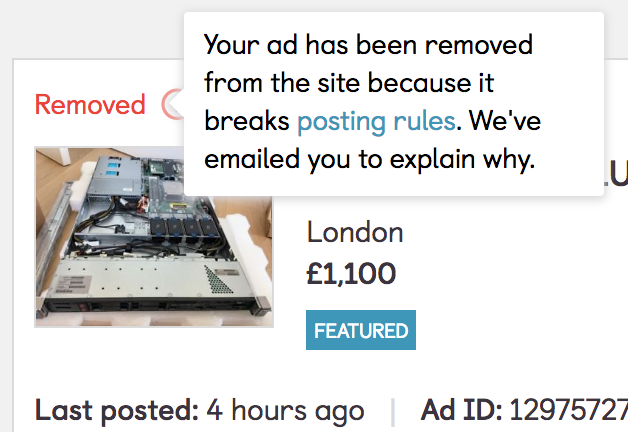

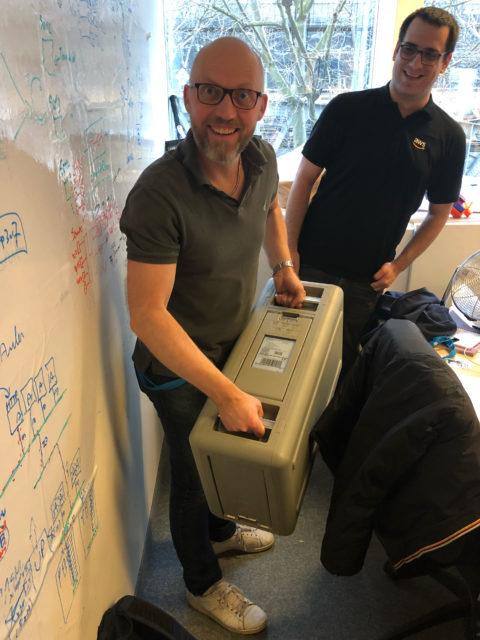

(in the picture above you can see me helping with the final step of a cloud migration: loading a massive storage array onto a truck after datacenter decommissioning and shutdown)

…check ✓

So What?

Two years in, and for me it still feels like it’s Day One. Learning something new every day, consciously jumping into rabbit holes every other day just to re-emerge stronger and wiser later on. Being surrounded by the smartest people on earth makes you feel extremely small sometimes, but also guarantees you endless opportunities for growth.

This is what I’ll keep doing.

(want to join the band? just ping me!)